January 21, 2020

Visual Search for Part Recognition Arrives

One of the most challenging experiences in retail is shopping for parts. Whether you’re walking into a home improvement store looking for a 1.75-inch hex bolt, replacing a headlamp bulb in your car, or even finding the right missing Lego brick to complete a replica of the Millennium Falcon, it’s like searching for a needle in a haystack at most retailers.

The problem comes down to being able to identify the specific features and attributes of the part in question, and then being able to track it down amidst the thousands of part SKUs many retailers carry. This isn’t just an issue for customers looking for replacement parts; it’s just as much of a headache for store associates. Helping customers find parts is such a pain point that many store employees will simply avoid the most SKU-dense aisles, like the Fastener aisle at many home improvement stores.

Beyond customer interactions, the part-recognition quandary rears its head in other places: looking up parts without barcodes at point-of-sale is a regular, time-sucking task; decontaminating bays where parts have been put in the wrong bins is a daily to-do; and enabling car mechanics to find the right replacement part when they’re working on an engine block is an especially tough obstacle.

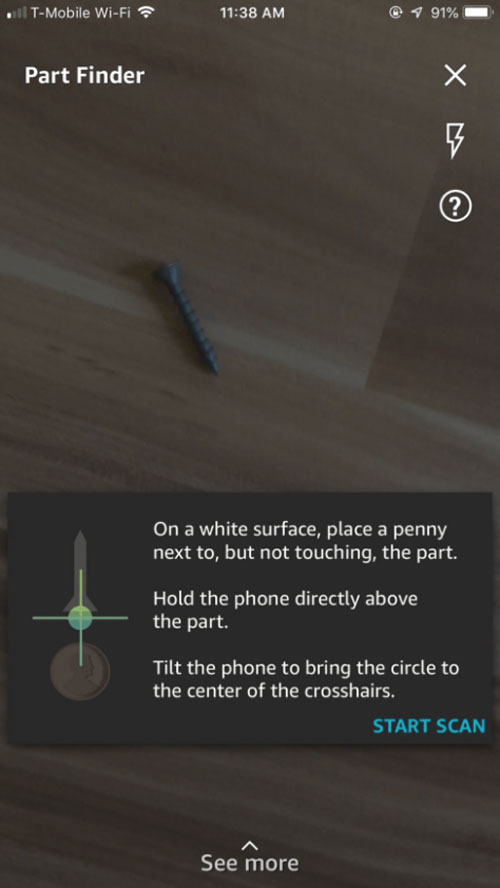

Visual search, the technology that identifies products from photos, has long been seen as an intriguing solution to the part-recognition challenge. Everyone from Amazon to Ferguson has invested in technology designed to let customers and employees find parts with their camera. Yet you have likely not seen one of these solutions in the market. For a brief one-month period, Amazon added a Part Finder mode to its visual search camera, but soon removed it. While Amazon hasn’t publicly commented on the reversal, the feature proved cumbersome and limited in testing. Users needed to scan a part alongside a coin as a size reference, and even those who got over this hurdle found that the feature only ID’d a small number of screws and nuts.

Amazon’s short-lived Part Finder Camera.

Visual search for parts, it turns out, is more challenging than it is for, say, fashion or furniture. The reason comes down to training data. With fashion retail, visual search players ranging from Amazon to Pinterest to Slyce have been able to mine a rich trove of product photography to train deep-learning models to recognize both the features and tags associated with products, as well as overall image similarity. This enables camera search, as well as numerous other visual search applications like finding visually similar products when something is out of stock, or enriching product metadata to improve SEO and search. But the same visual-search experiences have been a challenge for part recognition, mainly due to the dearth of imagery of the parts.

It would be tempting to jump to the solution of simply photographing all parts. However, early work in visual search for parts quickly revealed issues with this approach. For starters, smaller parts can differ only minutely in size, or in attributes like thread count and finish. Even if a retailer had a part’s length and width in their metadata, these specifications might not match the end-to-end dimensions of the actual part but rather the size of a smaller piece (think the shaft of a hex bolt). What was needed was a highly constrained environment, where the products could be photographed with ideal lighting to illuminate the product features and reduce any penumbral shadows, and where multiple measurements could be collected from a fixed camera.

Enter the Part Finder Kiosk, one of the first devices created to capture distributed training data of parts. The device, unveiled at the National Retail Federation’s 2020 Expo, enables a part retailer to quickly capture imagery of a part from multiple angles and orientations, and dynamically add it to a metric-learning visual search model to essentially teach the system to recognize new parts. The device is compact enough to enable the product photography to take place in warehouses and stores, so that parts don’t need to be shipped to a remote studio.
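
To make the "dynamically add it to a metric-learning visual search model" idea concrete, here is a minimal sketch of how such an index might behave. It is not the kiosk's actual implementation. It assumes some trained model has already turned each photo into an embedding vector, and the class name `PartIndex`, its methods, the demo SKUs, and the three-dimensional vectors are all hypothetical. The point it illustrates is that enrolling a new part only means storing its embeddings, with no retraining step.

```python
import math
from collections import defaultdict


def normalize(vec):
    """Scale a vector to unit length so a dot product equals cosine similarity."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


class PartIndex:
    """Toy nearest-neighbour index over part embeddings (hypothetical)."""

    def __init__(self):
        # Maps each SKU to the unit-length embeddings of its captured views.
        self._views = defaultdict(list)

    def enroll(self, sku, embeddings):
        """Store one embedding per captured angle; the model is not retrained."""
        for vec in embeddings:
            self._views[sku].append(normalize(vec))

    def search(self, query, k=3):
        """Return up to k (sku, similarity) pairs, best match first."""
        q = normalize(query)
        scored = []
        for sku, views in self._views.items():
            # A part scores as well as its best-matching view.
            best = max(sum(a * b for a, b in zip(q, v)) for v in views)
            scored.append((sku, best))
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


# Made-up embeddings standing in for the output of a trained model.
index = PartIndex()
index.enroll("hex-bolt-demo", [[0.9, 0.1, 0.0], [0.8, 0.2, 0.1]])
index.enroll("wing-nut-demo", [[0.1, 0.9, 0.2]])
results = index.search([0.85, 0.15, 0.05], k=2)
```

A production system would likely replace the linear scan with an approximate nearest-neighbour library, but the enroll-then-search flow would stay the same.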

Even more valuable than its ability to collect imagery and training data is the kiosk’s recognition mode. Customers and store associates can place any part that has been trained into the device’s lightbox and get an instant, exact-match recognition of the part. Home Depot utilizes this kiosk, branded “Drop & Find”, in their stores to aid customers and store associates in identifying fasteners and finding the exact bay and bin where they are located. With a 90% exact-match rate on more than 5,000 fasteners sold in store, it is helping solve an age-old problem for customers and giving the retailer extraordinary customer satisfaction scores.

NAPA Auto Parts is another retailer leveraging visual search for parts. Automotive parts come in a wide variety of sizes, though, and not all of them fit in a kiosk. So while the Part Finder Kiosk helps deliver NAPA know-how for many SKUs sold in store, NAPA is also leveraging visual search technology via mobile to help customers, store associates and auto technicians quickly find and order replacement parts.

Similar to NAPA, industrial companies ranging from Bosch to Daimler have begun utilizing mobile part recognition within their factories and warehouses. Typically the technology is integrated as a camera mode, where the end user snaps a photograph of a part to find either an exact match or a candidate part set. As with the kiosk solution, the most effective mobile solutions have required the collection of part training data to seed the models. Visual search software providers typically have an application that the retailer or industrial-part company can use to photograph the parts from a variety of angles. In addition to photographing the parts in isolation, it is common to also photograph the parts in situ (whether in the undercarriage of a car, in a manufacturing machine, or inside an oil rig) in order to create training data for a visual search index specific to that environment.
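
The choice between returning an exact match and returning a candidate part set can be sketched as a simple decision rule over ranked search results. This is an illustration only: the function name `resolve`, the similarity threshold, the margin, and the candidate limit are assumptions, not values used by any vendor named here.

```python
def resolve(ranked, exact_threshold=0.95, margin=0.05, max_candidates=5):
    """Decide between an exact match and a candidate set.

    ranked: list of (sku, similarity) pairs sorted best first.
    All thresholds are illustrative assumptions.
    """
    if not ranked:
        return {"kind": "none", "skus": []}

    top_sku, top_score = ranked[0]
    runner_up = ranked[1][1] if len(ranked) > 1 else float("-inf")

    # Call it exact only when the best hit is strong AND clearly ahead
    # of the second-best hit; near-identical parts fail the margin test.
    if top_score >= exact_threshold and top_score - runner_up >= margin:
        return {"kind": "exact", "skus": [top_sku]}

    # Otherwise hand the user a short list to choose from.
    return {"kind": "candidates", "skus": [sku for sku, _ in ranked[:max_candidates]]}


clear_hit = resolve([("bolt-a", 0.98), ("bolt-b", 0.80)])
close_call = resolve([("bolt-a", 0.98), ("bolt-b", 0.96)])
```

Here `clear_hit` resolves to a single SKU, while `close_call` falls back to a candidate list because the two scores are too close to separate with confidence.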

While it is still early days for part recognition, the technology is now delivering the same level of accuracy that visual search for fashion and furniture reached only two years ago. And just as visual search for fashion quickly became table stakes for most apparel retailers, it is sure to see similar rapid adoption among home improvement, auto, and industrial companies. Early adopters are initially embracing the mobile and in-aisle kiosk solutions. But soon, expect to see part recognition at point-of-sale and on assembly lines. Anywhere a customer or employee encounters the challenge of figuring out “what is this thing” is fair game.